Google Cloud simplifies machine learning project setup with minimal infrastructure hassle. It handles the boring stuff—server management and scaling—so data scientists focus on actual model building. The platform plays nice with TensorFlow, PyTorch, and other frameworks while offering pre-trained APIs for common tasks. Its pay-as-you-go model works for projects big and small. More power awaits beneath the surface.

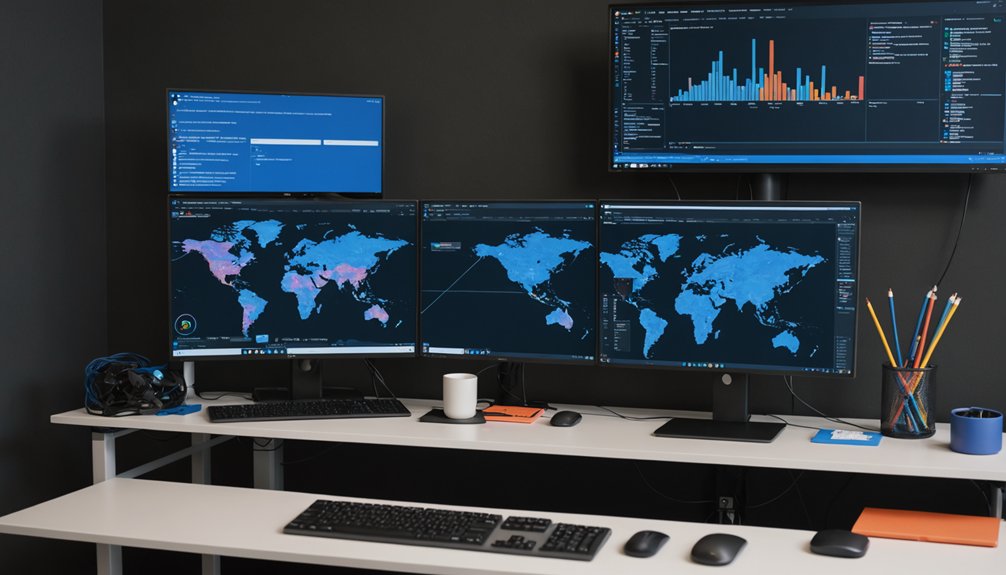

Nearly every tech giant offers machine learning tools these days, but Google Cloud's suite stands out in a crowded field. It's not just another platform with flashy features and empty promises. Google delivers a thorough ecosystem of machine learning options that cater to everyone from curious beginners to hardcore data scientists. Their Cloud Machine Learning Engine (later renamed AI Platform and now succeeded by Vertex AI) manages the heavy lifting so developers can focus on building models, not babysitting infrastructure.

The platform integrates seamlessly with Cloud Storage and Dataflow. No surprise there. Google's always been good at making its products play nice together. What's impressive is how they've streamlined the whole process. Training models and running predictions? Simple. Using popular frameworks like TensorFlow and scikit-learn? No problem. The system automatically provisions resources as needed, scaling up or down based on demand. That's right: no more guessing how many servers you'll need. The platform also exposes REST APIs, so external applications and services can request predictions directly. And don't skip data preprocessing; it matters just as much as model selection when building effective models.
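To make the REST integration concrete, here is a minimal sketch of what an online prediction request could look like. It assumes a model already deployed to a Vertex AI endpoint; the project name, region, endpoint ID, and feature values are all placeholders, and the helper `build_predict_request` is a hypothetical name. The code only builds the URL and JSON body. Actually sending it would also need an OAuth 2.0 bearer token in the `Authorization` header.

```python
import json


def build_predict_request(project, region, endpoint_id, instances):
    """Return (url, body) for an online prediction call. Nothing is sent."""
    # Vertex AI online prediction endpoints are regional.
    url = (
        f"https://{region}-aiplatform.googleapis.com/v1/"
        f"projects/{project}/locations/{region}/endpoints/{endpoint_id}:predict"
    )
    # The request body carries a list of instances, one per prediction.
    body = json.dumps({"instances": instances})
    return url, body


# Placeholder values, for illustration only.
url, body = build_predict_request(
    project="my-project",
    region="us-central1",
    endpoint_id="1234567890",
    instances=[[5.1, 3.5, 1.4, 0.2]],
)
print(url)
print(body)
```

The shape of each instance depends entirely on the model behind the endpoint, so treat the feature list above as a stand-in.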

Server-side pre-processing is another game-changer. Data preparation eats up most of a data scientist's time, and Google knows it. Their tools make this typically tedious process more efficient. Less time cleaning data means more time building cool stuff.


And the pricing? Pay only for what you use. Revolutionary? No. Practical? Absolutely.

The pre-trained APIs are where things get interesting. Vision API handles image analysis tasks like face detection and object recognition. It's spooky how good it is. The Natural Language API breaks down text like a literature professor on caffeine. Both save countless development hours.
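For a feel of how little code the Vision API needs, the sketch below builds the JSON body for its `images:annotate` REST method, asking for face detection and object localization in one request. The helper name `build_annotate_request` and the fake image bytes are assumptions for illustration; a real call would POST this body to the URL shown, with an API key or OAuth token attached.

```python
import base64
import json

VISION_URL = "https://vision.googleapis.com/v1/images:annotate"


def build_annotate_request(image_bytes, max_results=5):
    """Build the JSON body for one image with two feature requests."""
    body = {
        "requests": [
            {
                # Inline images are sent as base64-encoded content.
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                "features": [
                    {"type": "FACE_DETECTION", "maxResults": max_results},
                    {"type": "OBJECT_LOCALIZATION", "maxResults": max_results},
                ],
            }
        ]
    }
    return json.dumps(body)


# Placeholder bytes; in practice, read them from an actual image file.
payload = build_annotate_request(b"fake image bytes")
print(payload)
```

The Natural Language API follows the same pattern: a small JSON document in, structured annotations out.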

Google Cloud Machine Learning isn't perfect. Nothing is. But it offers a solid foundation for projects of any size. The platform supports multiple frameworks, integrates with essential services, and scales without manual intervention. The course offers comprehensive training on both predictive and generative AI projects to help you maximize these capabilities. Google ML applications extend to real-world problems including optical character recognition, medical diagnosis, and weather prediction.

For teams looking to implement machine learning without drowning in infrastructure concerns, Google's offering deserves serious consideration. It's powerful, versatile, and surprisingly user-friendly. In the machine learning arms race, Google's bringing serious firepower.

Frequently Asked Questions

How Do I Optimize Google Cloud Costs for ML Training?

Optimizing cloud costs for ML training isn't rocket science.

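Implement autoscaling to adjust resources based on actual workload. Rightsize those VMs; there's no point paying for compute power you're not using. Preemptible VMs (now sold as Spot VMs) can slash compute costs by up to 80%, but Google can reclaim them at any time, so checkpoint long training jobs.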

Set budget alerts before things get out of hand. Regular cost reviews reveal waste.

And don't forget data management—storage costs add up fast. Smart governance saves money. Period.
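The arithmetic behind these tips is simple enough to sketch. The example below compares an on-demand training run against the same run at an 80% discount, then checks which budget alert thresholds each would trip. The hourly rate, job length, budget, and threshold percentages are made-up numbers, and both function names are hypothetical; plug in real prices from your own billing data.

```python
def training_cost(hours, hourly_rate, discount=0.0):
    """Cost of a training run, with an optional fractional discount."""
    return round(hours * hourly_rate * (1.0 - discount), 2)


def crossed_thresholds(spend, budget, thresholds=(0.5, 0.9, 1.0)):
    """Return the alert thresholds (as budget fractions) that spend has reached."""
    return [t for t in thresholds if spend >= budget * t]


# Hypothetical job: 100 hours on a VM billed at $2.50 per hour.
on_demand = training_cost(hours=100, hourly_rate=2.50)
discounted = training_cost(hours=100, hourly_rate=2.50, discount=0.80)

budget = 200.0  # hypothetical monthly budget in dollars
print(on_demand, crossed_thresholds(on_demand, budget))
print(discounted, crossed_thresholds(discounted, budget))
```

With these numbers the on-demand run blows through every alert, while the discounted run stays under the first one. The catch is that a reclaimed VM can force a restart, so the real saving depends on how well the job checkpoints.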

What's the Difference Between Vertex AI and AI Platform?

Vertex AI is Google's newer, unified ML platform that streamlines the entire workflow.

AI Platform? The older, more fragmented service.

Vertex integrates everything—data prep, training, deployment—while AI Platform requires manual stitching between services.

Sure, they both scale, but Vertex adds AutoML capabilities for the ML-challenged.

It's basically the difference between driving a Tesla versus assembling a car from parts.

Same destination, different journeys.

Can I Integrate Google Cloud ML Workflows With On-Premise Infrastructure?

Yes. Google Cloud ML workflows can integrate with on-premise infrastructure through several methods.

Application Integration and Workflows services connect cloud and local systems seamlessly. APIs, connectors, and service accounts with private keys handle authentication.

Data moves between environments via batch processes or real-time streams. It's not always pretty—different data structures create challenges—but the technical pathways exist.

Many organizations run hybrid setups this way. Pretty standard stuff.

How Secure Is Data Stored in Google Cloud for ML?

Google Cloud offers robust security for ML data through automatic encryption at rest. Users can choose between Google-managed or their own encryption keys.

Data gets split into chunks, each with its own key—pretty clever. IAM controls regulate access, while DLP services help identify sensitive information.

It's not perfect—no system is—but Google's security measures are extensive. They've invested billions in this stuff, after all.

Which Google Cloud Regions Offer the Best ML Performance?

For ML workloads, Google's us-central1 (Iowa) and us-east4 (Virginia) regions typically offer superior performance.

They're packed with the latest TPUs and GPUs.

Europe? Try europe-west4 (Netherlands).

Asia users get solid results with asia-east1 (Taiwan).

Performance varies though. Some regions have specialized hardware configurations.

Latency matters too—choose regions near your data sources and users.

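Cost differences exist too: the same machine type or accelerator can be priced differently from region to region. Hardware availability also shifts as Google rolls out new accelerators, so check the current GPU and TPU region listings before you commit.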
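One way to turn that advice into a decision is to filter regions by the hardware you need, then pick the one with the lowest latency you measured. The sketch below does exactly that. The region names come from the answer above, but the latency figures and accelerator lists are invented for illustration, and `pick_region` is a hypothetical helper, not part of any Google SDK.

```python
def pick_region(candidates, required_accelerator):
    """Pick the lowest-latency region that offers the required accelerator."""
    eligible = [r for r in candidates if required_accelerator in r["accelerators"]]
    if not eligible:
        raise ValueError(f"no candidate region offers {required_accelerator}")
    return min(eligible, key=lambda r: r["latency_ms"])["name"]


# Invented measurements and hardware lists; replace with your own data.
regions = [
    {"name": "us-central1", "latency_ms": 95, "accelerators": {"gpu", "tpu"}},
    {"name": "us-east4", "latency_ms": 80, "accelerators": {"gpu"}},
    {"name": "europe-west4", "latency_ms": 20, "accelerators": {"gpu", "tpu"}},
    {"name": "asia-east1", "latency_ms": 210, "accelerators": {"gpu"}},
]

print(pick_region(regions, "tpu"))
print(pick_region(regions, "gpu"))
```

A fuller version would weigh price alongside latency, but even this simple filter keeps you from picking a nearby region that lacks the hardware your job needs.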